The Challenge of Testing

It's easy to jump into testing and focus on the low-hanging fruit, and that's OK, because almost any testing has value. Test what you are able to test, and you will learn about the state of your software system. A quality program has to start somewhere; from there you learn, revise, and improve your testing approach in a virtuous cycle.

A common goal of quality programs is running the right tests at the right times.

This is all fairly obvious. Identifying and writing tests, and setting up the mechanisms to run them, can be difficult and labor-intensive, but it isn't the part of testing that is truly challenging.

No, the actual challenge of testing is planning and defining an effective conceptual map of your applications and systems, so that you can properly identify what could be tested. When you know what could be tested, you can help stakeholders and leadership decide what should be tested.

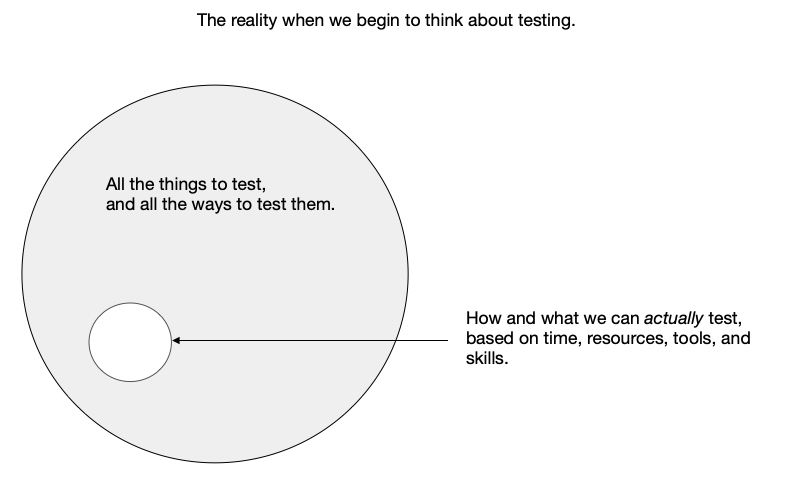

The superset of things we could do to test our software products and services (and the systems that create and support them) is so large that it might as well be infinite. If you pick tests arbitrarily, or only because they are low-hanging fruit, those tests are disconnected from business priorities and from any understanding of business and quality risks. That is why testing without deep planning is a poor strategy.

What you want is to fill the space inside the large circle with known things. You cannot test all of them, but you need to know them so you can choose among them.

I use this diagram a lot, both in interviews and when I explain these concepts to leadership. My usual example is security: explicit security-focused testing is often not the first thing test teams think of when they start identifying tests, even though from a business perspective it is a very important focus. Because security testing is specialized and often complicated, it is rarely the first thing tested. My point in this essay is that you, as a test professional, need a methodology that surfaces the big picture, so plans can be made based on business considerations rather than on whatever arbitrary choices happen to be easy. The challenge of testing is to know this and to address it effectively.

Putting some effort into this kind of planning yields several concrete benefits:

- You are counteracting the cognitive biases that make you believe your arbitrary, ad hoc planning is awesome and correct. [1]

- You can be transparent and collaborative in your planning and prioritization. Fleshing out that superset of what could be tested gives other people something concrete to give feedback on. [2]

- You know the job of identifying and writing tests is huge; fleshing out what could be tested makes that clear to other people, too. It gives you a concrete anchor when arguing for more resources, more time, or both. [3]

- You have enough information to build a queue of tests to write for manual testing, and a separate queue for test automation.

- You have a conceptual framework that makes it easier to support the testing of new features.

The challenge of testing is to make the unknown unknowns clear enough to allow for educated decision-making.

Now, how to flesh out that superset of things that could be tested, and the ways they could be tested, is a topic of its own. In a separate essay I'll present what I call the functional areas mapping approach, but some simple, old-fashioned brainstorming is a great start. You can up your game by combining it with some mind mapping (on a whiteboard or with a specialized tool).

Notes

1. Seriously, it can be a battle to break through our unconscious biases and patterns of cognitive behavior. Some relevant cognitive biases: confirmation bias (the current plan, such as it is, is clearly pretty darned good) and status quo bias (the current plan, even though it is arbitrary, is clearly pretty darned good because it's what we have). See also the books *Black Box Thinking* by Matthew Syed and *Being Wrong* by Kathryn Schulz.

2. It's impossible to make a perfect plan; most test work is experimental and exploratory. If you get feedback from stakeholders and partners that your decisions are good, that is gold! And if you get feedback that your decisions are not quite right, with suggestions for corrections, that's gold too! Your attempt to flesh out those unknown unknowns serves as a strawman for people to respond to.

3. The arguments go something like this: "Look at all these things to test that are too low priority for us to tackle right now; if you (my manager) feel that these need to be addressed now, then we need more resources or you need to de-prioritize other work." Or: "If you (my manager) are setting this tight schedule for testing, you will have to think hard about what you don't want us to test."